Small Data: The Next Big Thing?

In a May 2021 press release, Gartner asserted that, by 2025, 70% of organizations will shift their focus from big data to small and wide data, providing more context for analytics and making artificial intelligence (AI) less data-hungry. “Taken together, they can use available data more effectively, either by reducing the required volume or extracting more value from unstructured, diverse data sources,” said Jim Hare, distinguished research vice president at Gartner. Small data is an approach that requires less data but still offers valuable insights. Wide data enables the analysis and synergy of various small and large, unstructured and structured data sources. The following sections offer some perspectives on why this trend is gaining momentum.

AI Tools and Project Segmentation Are Crucial Factors

Today, AI is empowering almost every industry segment. Data generation, cloud storage, affordable computing and commoditized algorithms have taken center stage in AI activities. Yet when it comes to current machine learning initiatives, the decision-makers who fund them are losing interest and trust. According to a 2020 US Census Bureau report, just under 9% of all major US companies use AI productively. Several industry reports put the failure rate of large-scale AI projects at between 70% and 80%.

In contrast, starting with a small project can demonstrate progress quickly and helps ensure that the right team is in place to solve the problem. To reach this goal, a company needs at the very least a data scientist and a machine-learning engineer. According to the US Bureau of Labor Statistics, the United States alone will need 11.5 million data science professionals by 2026, compared with the three million currently available. According to the report, low AI adoption is not due to a lack of willingness but to three significant challenges:

- Complexity of AI technology.

- Limited affordability.

- Unavailability of the right talent.

Rather than following the “old” path of managing big data with big projects, companies need a different mind-set in two respects: first, break the project down into smaller segments; second, select the out-of-the-box tools that best match your business needs. The market for quality tools is expanding rapidly, and so is the number of consultancies that install and maintain these tools, which significantly eases the workforce bottleneck in small- to mid-sized corporations.

The corporate tool inventory might also include tools built on giant data sets, such as the transformer models used to build Natural Language Processing (NLP) applications like customer-support voice bots. If implemented successfully, this tool strategy will strengthen the human factor in human–machine problem-solving. It will also foster creativity and innovation, because people gain more time to think and reflect.

A Neural Analogy to Creativity

A new fMRI study conducted by researchers at UCLA in 2022 suggests that creativity in artists and scientists is related to random connections between distant brain regions, made through a vast network of rarely used neural pathways. “Our results showed that highly creative people have a unique brain connectivity that tends to stay off the beaten track. While non-creatives tended to follow the same routes across the brain, highly creative people made their own roads,” Ariana Anderson of UCLA’s Semel Institute for Neuroscience and Human Behavior said in a news release. The study suggests that creativity blossoms when people get off the beaten path inside their brains. Although creativity has been studied for decades, little is known about its biological bases, and even less about the brain mechanisms of exceptionally creative people, said senior author Robert Bilder, director of the Tennenbaum Center for the Biology of Creativity at the Semel Institute.

This uniquely designed study included highly creative people from two different domains of creativity: visual arts and the sciences. It used an IQ-matched comparison group so that the markers it identified would reflect creativity, not just intelligence.

“Exceptional creativity was associated with more random connectivity, a pattern that is less ‘efficient’ but would appear helpful in linking distant brain nodes to each other,” according to Robert Bilder.

Bilder, who has more than 30 years of experience researching brain–behavior relationships, said, “The fact that Big C [highly creative] people have more efficient local brain connectivity may relate to their expertise. Consistent with some of our prior findings, highly creative people may not need to work as hard as other smart people to perform certain creative tasks.”

By analogy, high-quality AI tools applied in a corporate setting, combined with an orientation towards small data, may raise the level of creativity and innovation by giving people more time to think and reflect.

From Big to Small

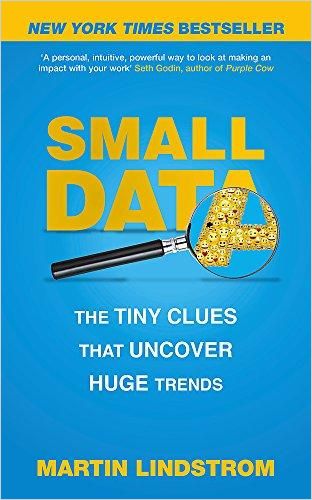

Over the past 10 years, most attention in AI research has focused on big data as the fuel for data science and machine learning. Big data has many beneficial applications, such as smart electric grids, autonomous vehicles, and money-laundering and threat detection, to name just a few. However, the energy spent on big data may obscure something we intuitively know. Martin Lindstrom’s book Small Data is about seemingly insignificant observations that reveal people’s subconscious behavior. The book makes the case that observation and research are essential when building a product or service.

It also underlines the need for empathy in design and confirms that “design thinking” is a critical part of the product and service design process. As 85% of our behavior is subconscious, small data provides clues to the causes of that behavior and to hypotheses about it. Big data, by contrast, is analytical data that correlates information.

But when prominent data analysts run random searches across billions of data sources, the results are imprecise, mainly because of built-in biases. By using small data, one can find the imbalances in people’s lives that represent a need and, ultimately, a gap in the market for a new brand.

One example is Lindstrom’s theory that Amazon will fail if it attempts to open unemotional, shelf-type bookstores, because such stores will not embed themselves in the community. They will not pick up on the clues the way independent booksellers do. As Lindstrom says: “Where big data is good at going down the transaction path if you click, pick and run, you could say that small data is fuelling the experiential shopping, the feeling of community, the feeling of the senses – all that stuff you cannot replicate online.” Lindstrom’s premise is quite compelling and raises doubts about the effectiveness of big-data predictive analytics. The more data one has, the more likely it is that the AI model fails to grasp its context or causality. Hence, it is no surprise that the “bigger is better” scenario is coming under scrutiny while “small is beautiful” gains traction.

The Value of Small Data and the Human Factor

According to Accenture, more than three-quarters of large companies today still have a ‘data-hungry’ AI initiative underway – projects involving neural networks or deep-learning systems trained on vast data repositories. Adapting to a new, disruptive mind-set takes time for large companies. Yet many of the most valuable data sets in organizations are quite small – kilobytes or megabytes rather than exabytes. Because this data lacks the volume and velocity of big data, it is often overlooked and left out of enterprise-wide IT innovation initiatives.

In a recent Harvard Business Review article, “Small Data Can Play a Big Role in AI,” James Wilson and Paul Daugherty describe the results of an experiment they conducted with coders working on health care applications. They conclude that emerging AI tools and techniques, coupled with careful attention to human factors, open new possibilities to train AI with small data. For every large data set (one billion columns and rows or more) used in large AI projects, a thousand small data sets may go unused. What Wilson and Daugherty learned from the experiment is that creating and transforming work processes by combining small data and AI requires close attention to human factors. They propose three human-centered principles that can help organizations get started with their small data initiatives:

- Balance machine learning with human domain expertise – Several AI tools exist for training AI with small data. For example, few-shot learning tools train a model on only a few examples instead of hundreds of thousands, and transfer-learning tools carry knowledge gained on one task over to new tasks. Both approaches, however, rely on human expertise to classify the reduced data sets.

- Focus on the quality of human input, not the quantity of machine output – In the existing system, coders focused on assessing large volumes of data. In the new system, they were encouraged to focus less on volume and more on instructing the AI on how to handle a given drug–disease relationship, for example by providing a link to a relevant website.

- Recognize the social dynamics in teams working with small data – In their new roles, the coders quickly came to see themselves not just as teachers of the AI but as teachers of their fellow coders. Most importantly, they saw that their reputations with other team members would rest on their ability to provide solid rationales for their decisions.

The application of AI tools enhances the human factor in human–machine systems and frees up the resources needed to build a mind-set of collaborative intelligence. The ever-rising complexity driven by exponential growth in research and technology can only be handled by people who are willing to share their expertise to keep their organizations competitive in a continuously changing market. The application of tools is, and always will be, a resource for improving productivity. Yet, most importantly, data is not an end in itself but a means to an end.

Next Steps:

Visit singularity2030.ch for additional articles on the topic.