Deepfakes: Can AI Restore Trust?

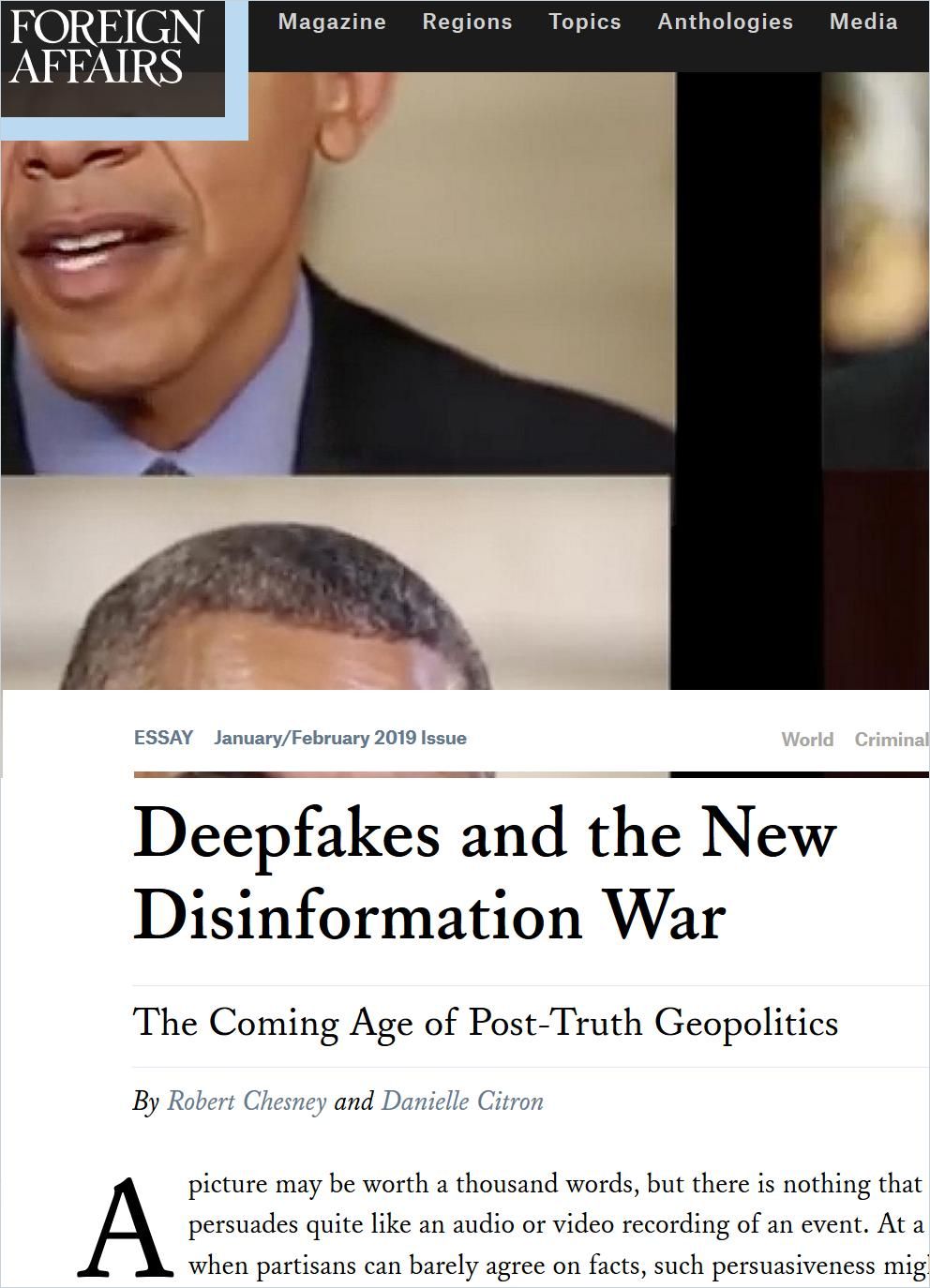

Deepfake techniques provide realistic AI-generated pictures and video sequences of people communicating via TV or computer screen. They have the potential to significantly impact how people determine the legitimacy of information presented online. These content-generation and modification technologies may affect the quality of public discourse and the safeguarding of human rights – especially given that deepfakes may be used maliciously as a source of misinformation, manipulation, harassment and persuasion.

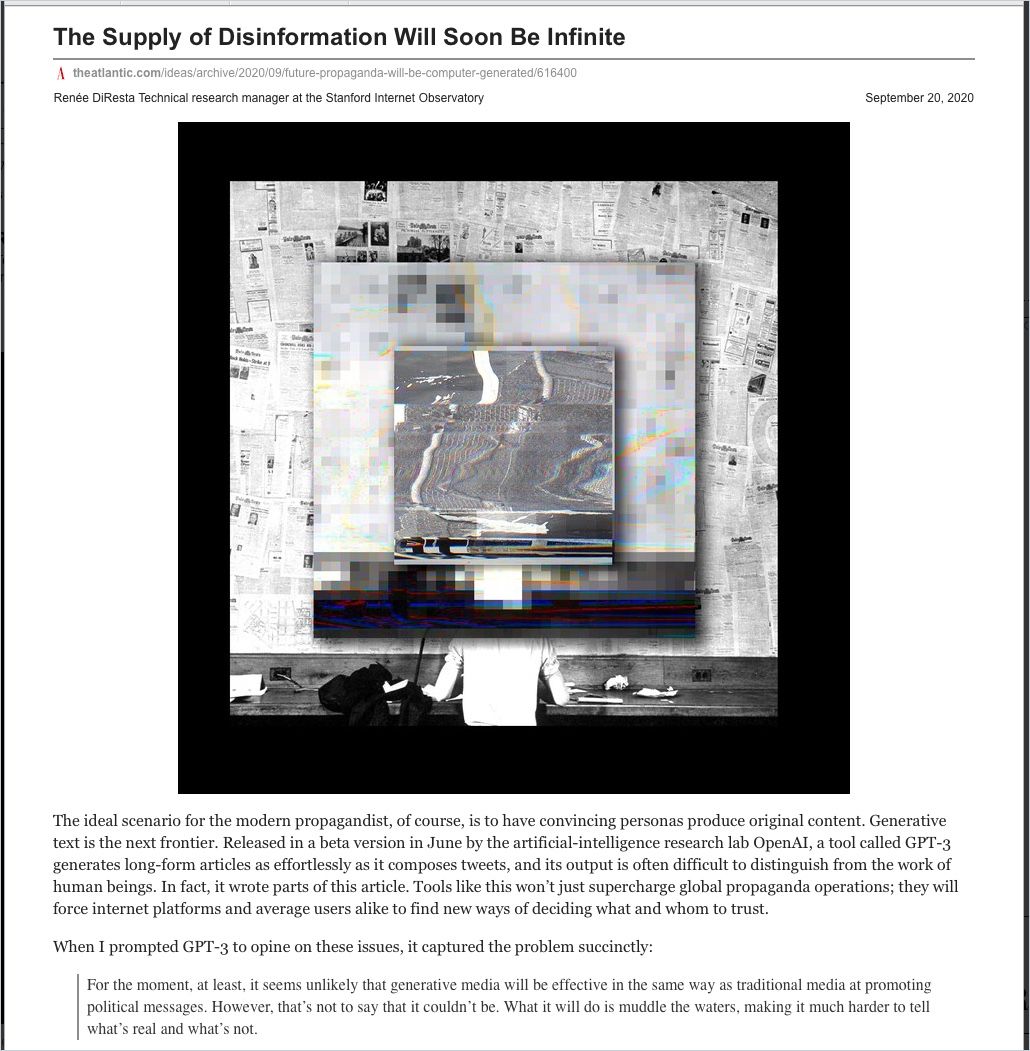

While we are already exposed to a wave of deepfaked pictures and video sequences (and their broad impact on pop culture), another form of AI-generated media is emerging: synthetic text. Deepfaked text is harder to detect and yet much more likely to become a pervasive force on the internet.

GPT-3, recently released by OpenAI, can produce passages of text on a selected topic that are indistinguishable from text written by humans. Instead of going through the hard work of creating content and building credibility through a growing audience, a website can be artificially grown and boosted using AI-generated content.

Within hours, a deepfaked news website can achieve top-level ranking, provided enough deepfaked text bots can create content that links back to its website.
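The link-boosting effect described above can be illustrated with a toy model. The sketch below uses a simplified PageRank-style calculation as a stand-in for link-based ranking; real search engines combine many more signals, and the page names, the number of bot pages and the damping value are all hypothetical choices made for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank over a dict {page: [pages it links to]}."""
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page starts each round with the "random jump" share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outlinks in links.items():
            if outlinks:
                # A page passes its rank on, split evenly over its outlinks.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # A page without outlinks spreads its rank over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


def build_web(num_bot_pages):
    """Hypothetical mini-web: two established sites, one fake news site,
    and a configurable number of bot-generated pages linking to the fake."""
    web = {
        "established_news": ["reference_site"],
        "reference_site": ["established_news"],
        "fake_news": ["established_news"],
    }
    for i in range(num_bot_pages):
        web[f"bot_page_{i}"] = ["fake_news"]
    return web


before = pagerank(build_web(0))
after = pagerank(build_web(20))
print(f"fake_news score without bot pages: {before['fake_news']:.3f}")
print(f"fake_news score with 20 bot pages: {after['fake_news']:.3f}")
```

In this toy setting the fake site's score rises as bot pages are added, even though none of those pages has any audience of its own. Whether and how quickly this translates into real search rankings depends on factors the sketch does not model.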

This new technology is trained on text from across the internet and generates detailed and realistic responses to submitted questions, giving the impression that an exchange between humans is taking place. As a result, algorithms launched to scan the web in search of “opinions” could subsequently publish their own machine-generated responses.

This corpus of new content and comments, largely manufactured by machines, could then be processed by other machines, leading to a feedback loop that could significantly alter our information ecosystem. While our trust in each other is diminishing and polarization is increasing, the detection of deepfakes becomes more challenging. Consequently, we will find it increasingly difficult to trust the content we see on our screens.

How to Recognize Deepfakes?

Identifying manipulated media is a technically demanding and rapidly evolving challenge that requires collaborations across the entire tech industry and beyond.

How to Spot a Deepfake like the Barack Obama–Jordan Peele Video (BuzzFeed)

AI techniques are being developed to detect and defend against synthetic and modified content. To address this challenge, the Partnership on AI, which represents almost all major companies engaged in AI technology, has formed a Steering Committee comprising civil society organizations, technology companies, media organizations and academic institutions, with the intent to strengthen the research landscape related to new technical capabilities in deepfake detection.

Coordinated by this Steering Committee, Amazon Web Services (AWS), Facebook and Microsoft have created the Deepfake Detection Challenge (DFDC) with US$1 million in prize money for the best entries. The goal of the challenge is to spur researchers around the world to build innovative new technologies that can help detect deepfakes and manipulated media.

The DFDC launched in December 2019, with a closing deadline in March 2020. Over the course of four months, more than 2,000 participants submitted over 35,000 fake-detection solutions. Challenge participants had to submit their code into a black box environment for testing, with the option to make the code of their submission publicly available. Time will tell which of the winning proposals will come to market. The large number of entries suggests that there is significant interest in solving the deepfake problem.
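To give a sense of how a black box evaluation can compare thousands of submissions, here is a minimal sketch that scores a detector's predicted probabilities against hidden labels using log loss. Log loss is a common metric for binary-classification challenges; its use here is an assumption for illustration, and the labels and predictions are made-up examples, not challenge data.

```python
import math


def log_loss(labels, predicted_probs, eps=1e-15):
    """Average binary cross-entropy; lower is better.

    labels: 1 for a fake video, 0 for a real one.
    predicted_probs: the detector's estimated probability that each video is fake.
    """
    total = 0.0
    for y, p in zip(labels, predicted_probs):
        # Clip probabilities so that log(0) can never occur.
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


# Hypothetical hidden labels held by the organizers.
hidden_labels = [1, 0, 1, 1, 0]

# Three hypothetical submissions evaluated on the same hidden set.
uninformed = [0.5, 0.5, 0.5, 0.5, 0.5]       # always unsure
good_detector = [0.9, 0.1, 0.8, 0.7, 0.2]    # mostly right, fairly confident
overconfident = [0.99, 0.99, 0.01, 0.99, 0.01]  # confident but often wrong

for name, preds in [("uninformed", uninformed),
                    ("good_detector", good_detector),
                    ("overconfident", overconfident)]:
    print(f"{name}: {log_loss(hidden_labels, preds):.4f}")
```

A metric of this kind rewards calibrated confidence: a detector that is confidently wrong is penalized more heavily than one that admits uncertainty, which matters when detectors face manipulation techniques they have not seen before.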

However, one must keep in mind that this first DFDC only addressed the use of software tools to detect deepfakes made up of photos or video sequences. Considering that this problem has been around for quite some time, and generates a lot of media attention, it is astonishing that it took so long for the AI industry to react.

Moreover, with the emergence of deepfaked text and its destructive potential, one can conclude that the AI industry, despite some possibly well-meant intentions, is neither capable of nor ready for serious self-regulation. The consistently growing volume of internet traffic is generating income across the entire AI value chain. High-tech companies with huge financial resources have emerged, monetizing data on individuals’ personalities and behaviour. Investing heavily in AI technology, they are strengthening their position while cleverly manoeuvring through the restraints of antitrust law. Making the deepfake problem a top priority requires strong governmental action and public pressure, as the population has no option to roll back its dependency on internet communication.

Theories of “Truth”

Truth is usually held to be the opposite of falsity. The concept of truth is discussed and debated in various contexts, including philosophy, art, theology and science. Over the past centuries, many concepts of truth have emerged.

Most commonly, truth is viewed as the correspondence of language or thought to a mind-independent world.

Called the “correspondence theory of truth,” with prominent supporters such as Bertrand Russell or Ludwig Wittgenstein, the theory maintains that the key to truth is a relation between a proposition and the world – a proposition is true if and only if it corresponds to a fact in the world.

The correspondence theory therefore anchors truth in reality; this is its power, but also its weakness. The theory may also be regarded as the “common sense view of truth.” Reality is the truth-maker while the idea, belief or statement is the truth-bearer. When the truth-bearer (an idea) matches the truth-maker (reality), they are said to stand in an “appropriate correspondence relationship,” where truth prevails.

The Era of “Post-Truth”

In 2016, against the backdrop of the Facebook–Cambridge Analytica data scandal and the manipulation of the United States presidential election and the United Kingdom referendum on withdrawal from the European Union (Brexit), the new and rather obscure term “post-truth” became prevalent. For this reason, Oxford Dictionaries selected it as the “Word of the Year” and defined it as “a term relating to circumstances in which objective facts are less influential in shaping public opinion than appeals to emotion and personal belief.” So, what is the post-truth phenomenon and what is new about it?

The historian Yuval Noah Harari suggests that the first point we need to know about fake news is that it is an old phenomenon and that at the outset of the 21st century, truth is not in worse shape than it was in previous periods. The post-truth phenomenon typifies Homo sapiens and is rooted in an ability to create stories and fabrications and then believe them – the myths, religions and ideologies that enable the creation of cooperation and ties between complete strangers. According to Harari, Homo sapiens have always preferred power over truth and have invested more time and effort in ruling the world than in trying to understand it.

What makes the current trend of fake news different is technology, which enables propaganda to be tailored on an individual basis and lies to be matched to each individual’s prejudices. Trolls and hackers use big data algorithms to identify each person’s unique weaknesses and tendencies, and then fabricate stories consistent with them. They use these stories to reinforce the prejudices of those believing in them, to exacerbate the rifts in society and to puncture the democratic system from within.

American philosopher Daniel Dennett said during a recent interview with British journalist Carole Cadwalladr that humankind is entering a period of epistemological murk and uncertainty such as we have not experienced since the Middle Ages. According to Dennett, the real danger before us is that we have lost respect for truth and facts and have lost the desire to understand the world based on facts.

Where to Go from Here?

AI technology will provide tools to detect deepfakes, yet there will be counterefforts to bypass this detection. In this technology-based “cops and robbers” scenario, where we must distinguish fake from reality while in danger of being manipulated, we have to resort to consciousness and common sense to protect our identity and personality.

Both common sense and consciousness are outside the realm of today’s AI technology, and it is questionable whether AI will ever overcome this last hurdle to achieve human-like intelligence. Consequently, to regain trust we have to advance to a philosophical level of discussion. Moreover, to restore truth, we might have to adopt completely new mind-sets governing our relationship to facts.

At some point, we might ask whether there is any compelling and inevitable reason why the technology for generating deepfakes should exist: a technology that makes it impossible to believe anything ever again and automates core human activities, diminishing the application of creativity, humanity’s most valuable asset.

A Task for Humans

AI cannot restore trust; this is a task for humans to accomplish. In an interview with Bill Moyers in 1988, philosopher Martha Nussbaum, considered one of the most remarkable minds of our time, stated that the language of philosophy has to come back from the abstract heights on which it so often lives to the richness of everyday discourse and humanity: “To be a good human being is to have a kind of openness to the world, an ability to trust uncertain things beyond our own control.” Due to the unprecedented influx of internet communication and AI technology in our daily lives, humans have become “sandwiched” between manipulation and trust. To escape the position of being manipulated, we must fall back on common sense and, most of all, on our capacity to think.
