GPT-4: A First Step Towards Artificial Superintelligence?

On March 22, 2023, based on experiments with an early version of GPT-4, Microsoft researchers reported that the model exhibited more general intelligence than previous AI models. Given the breadth and depth of GPT-4’s capabilities, including close to human-level performance on various novel and complex tasks, the researchers concluded that it could reasonably be viewed as an early yet still incomplete version of Artificial General Intelligence (AGI). However, they also pointed out that many regulatory and ethical issues must be resolved to take advantage of this technology while avoiding severe socio-economic problems.

From GPT-4 to Artificial Superintelligence

Artificial superintelligence can be defined as any intellect that greatly exceeds the cognitive performance of humans in virtually all domains of interest. It does not yet exist and remains a hypothetical state of AI. The aim is to surpass human cognitive capacity, which is constrained by the chemical and biological limits of the human brain. A few experts doubt that it will ever exist, and some have raised concerns that it could threaten humanity. However, many AI researchers believe that the creation of artificial superintelligence is inevitable.

Researchers generally distinguish it from two other categories: narrow AI and AGI. Narrow AI serves a dedicated purpose. It already exists and is widely used as a tool to increase productivity. It encompasses a range of machine applications that perform specific tasks normally requiring human intelligence, relying on specialized hardware and software and on machine-learning algorithms. AGI, in contrast, is often referred to as strong AI and has not yet been achieved. Its goal is to enable machines to perform any task that requires the cognitive abilities of a human being. It would combine several human capacities, including consciousness, decision-making, and sensory perception, and is considered the prerequisite for achieving artificial superintelligence.

When Will AI Reach Superintelligence?

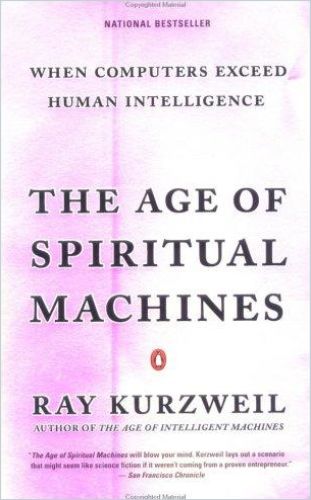

In a recent interview with Futurism, Shane Legg, co-founder of Google’s DeepMind artificial intelligence lab, stood by the prediction about the arrival of AGI that he first made a decade ago. Legg based that prediction on Ray Kurzweil’s bestseller The Age of Spiritual Machines, which argues that computational power and the quantity of data will grow exponentially for at least a few decades, and he is convinced that it is still valid.

However, in his view, two factors could limit whether his prediction is realized:

- The first is that definitions of AGI rely on characteristics of human intelligence, which are difficult to test because the way we think is complicated. “You will never have a complete set of everything that people can do, for example, processes like developing episodic memory or the ability to recall complete ‘episodes’ that happened in the past,” Legg said. But if researchers can assemble a set of tests for human intelligence and an AI model performs well enough on them, then AGI has been reached.

- The second limiting factor, Legg added, is the ability to scale up AI training, an essential point given the financial and human resources AI companies are already investing to build large language models such as OpenAI’s GPT-4.

Asked where he thought we stand today on the path towards AGI, Legg said that existing computational power is sufficient and that the first key step would be to train AI systems on more data than a human can experience in a lifetime. Legg reiterated that there is a 50 percent chance that researchers will achieve AGI before the end of this decade, but added that he is “not going to be surprised if it does not happen by then.”

Reasons Why Superintelligence May Be Extremely Dangerous

An article in Scientific American argues that AI algorithms will soon reach a point of rapid self-improvement that threatens our ability to control them and poses a significant potential risk to humanity. “The idea that this stuff could get smarter than people… I thought this was way off… obviously, I no longer think that way,” said Geoffrey Hinton, often called ‘the godfather of AI,’ after he quit his job as one of Google’s top AI scientists in April. He is not the only one worried.

A 2023 survey of AI experts found that 36 percent fear that AI development may result in a ‘nuclear-level catastrophe.’ Almost 28,000 individuals, including Apple co-founder Steve Wozniak, Elon Musk, many CEOs and high-level executives, and members of AI-focused companies and research units, have signed an open letter written and published by the Future of Life Institute. The letter calls for a six-month pause, or moratorium, on new advanced AI development.

Why are they so deeply concerned? In short, they argue that AI development is moving far too fast; even OpenAI CEO Sam Altman called regulation ‘crucial’ in a US Senate hearing on the potential of AI. In their view, once superintelligence is achieved, AI can improve itself beyond human intelligence without human intervention, and we cannot know what it will do or how to control it. We will not be able to hit the off-switch, because a superintelligent AI will have thought of every possible way we might do that and taken actions to prevent being shut off. Any defenses or protections we attempt to build into these AI ‘Gods’ will be easily anticipated and neutralized once AI reaches superintelligence.

What seemed like science fiction a year ago should now be considered a realistic scenario.

OpenAI’s Vision of AGI and the Transition Towards Superintelligence

In his essay “Planning for AGI and beyond,” Sam Altman, once again CEO of OpenAI, explains that OpenAI’s mission is to ensure that AI systems that are smarter than humans benefit all of humanity. If AGI is successfully created, it could turbocharge the global economy and support the discovery of new scientific knowledge. AGI has the potential to give everyone incredible new capabilities in a world where we all have access to help with almost any cognitive task. However, it also comes with a serious risk of misuse and societal disruption.

Because the upside potential of AGI is so great, many developers do not believe it is possible or desirable for society to stop its development. Instead, society and the developers of AGI must figure out how to get it right. Accordingly, developers are becoming increasingly cautious about creating and deploying their models. Future decisions will require much more caution than society usually applies to new technologies, and more than many users would like. Some people in AI research consider the risks of AGI and its successor systems fictitious. Everyone would be delighted if they turned out to be right, but it is advisable to operate as if these risks exist.

The first AGI will be just a point along the continuum of intelligence.

Altman considers it likely that progress will continue from there, possibly sustaining the rate of progress seen over the past decade. If so, the world could become very different from today’s, and the risks could be extraordinary. A misaligned superintelligent AGI could cause serious harm, because it would be capable of accelerating its own progress beyond human control. Successfully transitioning to a world with superintelligence is perhaps one of the most critical projects in human history.

That this idealistic view can cause enormous friction, both in society and within organizations that need to recoup huge investments, became evident in the recent turmoil around Sam Altman’s dismissal as CEO of OpenAI, Microsoft’s announcement that it would hire him (Microsoft is a major investor in OpenAI), and his subsequent return to OpenAI. The Economist even described the now-obvious and profound split in the tech world as a “fight for AI dominance” between “doomers” and “boomers.”

However this fight ends, humanity’s insatiable curiosity is what has propelled science and its technological applications this far. Given the reasons above, it might be time to slow the development of artificial superintelligence through ethical and regulatory guidelines, even at the cost of curtailing exponential growth.