The Amazing Revival of Analog Computing

In a famous interview more than two decades ago, the physicist Freeman Dyson asked whether humans are analog or digital. Information about our genes is undoubtedly digital, coded in the four-letter alphabet of DNA. It seems possible, however, that information processing in our brains is partly digital and partly analog. Today, Dyson’s question drives a remarkable revival of analog computing. An article published by WIRED in March 2023, titled ‘The Unbelievable Zombie Comeback of Analog Computing,’ concludes that a new generation of analog computers will change the computing world drastically and forever. Here is why.

From Analog to Digital Computing

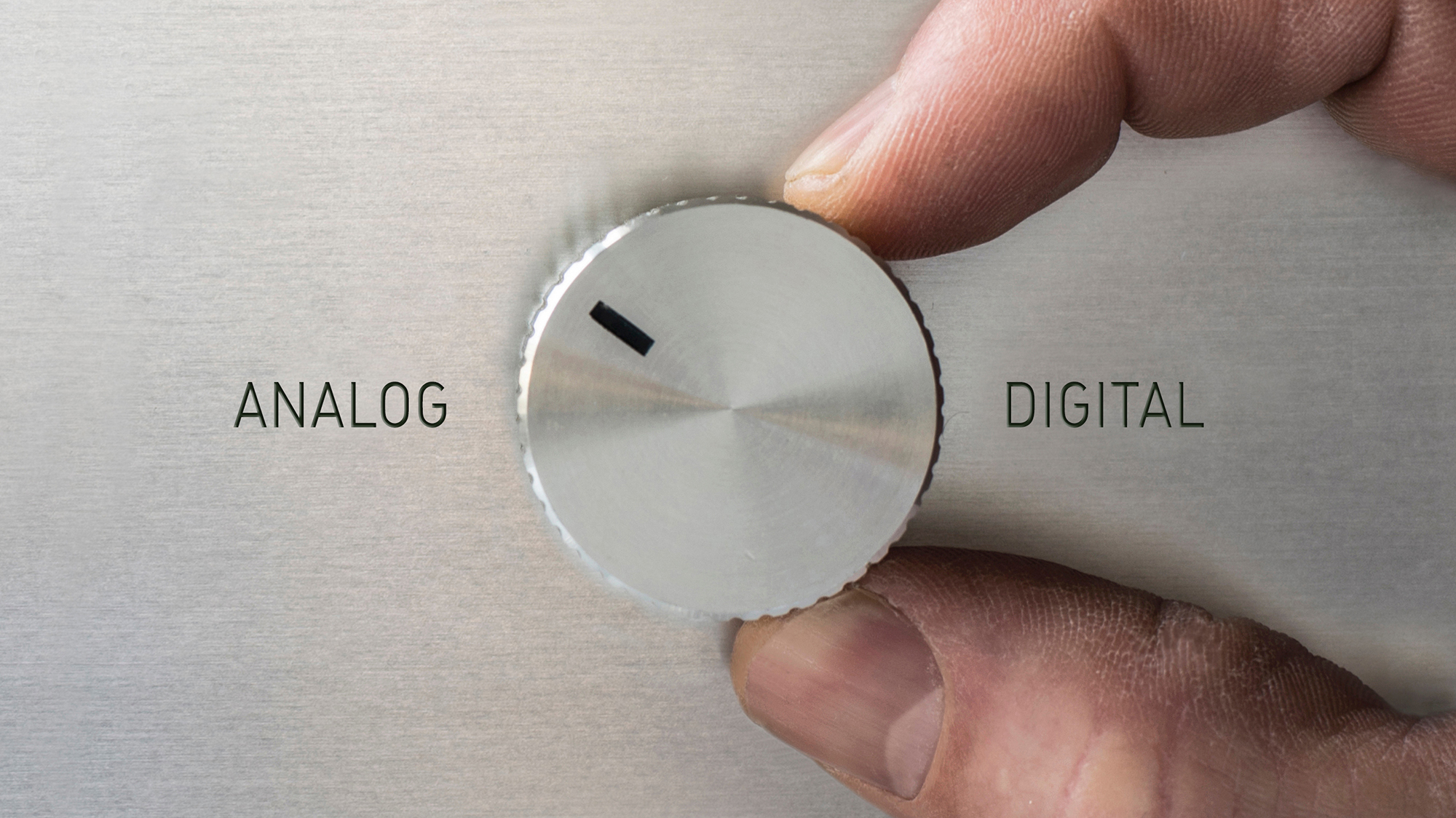

Analog computers were the first systems to be used for industrial process control, for example to control the dosing rate of chemicals delivered by a metering pump. Examples of analog data for information processing are pressure, temperature, voltage and weight.

A straightforward way to compare analog and digital functionality is to look at how an analog and a digital watch present time. The analog watch represents time as a continuum, while the digital watch converts time into a discrete step function.
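The watch comparison can be sketched in a few lines of Python. This is only an illustration: the function names `analog_reading` and `digital_reading` are made up for this example, and the "analog" value is of course itself a floating-point number standing in for a continuous quantity.

```python
import math


def analog_reading(seconds: float) -> float:
    """Angle of a sweeping seconds hand in degrees; changes smoothly with time."""
    return (seconds % 60.0) * 6.0  # 360 degrees / 60 seconds = 6 degrees per second


def digital_reading(seconds: float) -> int:
    """Seconds shown on a digital display; jumps once per whole second."""
    return math.floor(seconds) % 60


# Sample a quarter-second grid: the analog value moves at every sample,
# the digital value stays constant until the next whole second.
for t in (12.0, 12.25, 12.5, 12.75, 13.0):
    print(f"t={t:5.2f}s  analog={analog_reading(t):6.2f} deg  digital={digital_reading(t):02d}")
```

Between 12.0 s and 13.0 s the hand angle takes a different value at every sample, while the digital display holds the same number and then steps.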

Programming an analog computer involves the transformation of a given task into the circuitry of an analog computer, which is quite different from the programming of a digital computer. Some of the typical computing elements found in analog computers are function generators, integrators, comparators and multipliers.
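To give a feel for what such a ‘program’ looks like, the following sketch emulates, on a digital computer, a classic textbook patch: two integrators wired in a feedback loop to solve x'' = -ω²x (a harmonic oscillator). It is a simplified assumption-laden illustration, not a model of any particular machine; the names `integrator` and `simulate_oscillator` are hypothetical, and a real analog computer would integrate continuously in hardware rather than in small time steps.

```python
import math


def integrator(state: float, input_signal: float, dt: float) -> float:
    """One small time step of an idealised integrator element."""
    return state + input_signal * dt


def simulate_oscillator(omega: float = 1.0, t_end: float = math.pi, dt: float = 1e-4):
    """Emulate a two-integrator patch for x'' = -omega^2 * x."""
    x, v = 1.0, 0.0  # initial conditions set on the two integrators
    steps = round(t_end / dt)
    for _ in range(steps):
        accel = -(omega ** 2) * x      # multiplier feeds -omega^2 * x back into the loop
        v = integrator(v, accel, dt)   # first integrator: acceleration -> velocity
        x = integrator(x, v, dt)       # second integrator: velocity -> position
    return x, v


x, v = simulate_oscillator()
print(f"x(pi) = {x:.4f}  (exact solution cos(pi) = {math.cos(math.pi):.4f})")
```

The point of the sketch is that the ‘program’ is the wiring: which element feeds which. Changing the problem means changing the connections and coefficients, not rewriting a sequence of instructions.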

Because setting up an analog computer for each new problem is complex, digital computers have largely replaced analog computers. Over the last decade, digital processing has given rise to artificial neural networks and deep learning, which aim to mimic human brain functionality with machine intelligence. However, significant roadblocks remain on the way to Artificial General Intelligence (AGI), considered the ‘Holy Grail’ of AI:

Hardware

- Digital transistors are projected to reach their limits as Moore’s law ends.

- The ‘Von Neumann architecture’ is inefficient because the CPU and memory are separate, so data must constantly be moved between them.

- High-performance data centers rely on cloud computing and are vulnerable to cyber-attacks.

Software

- Large Language Models (LLMs) are extremely data-hungry.

- Transformer models like ChatGPT can produce erroneous results due to biased and synthetic data.

- Applications of weak AI are restricted to a limited set of tasks.

Considering the financial and human resources invested in an ever-growing list of digital AI tools, it seems plausible that a revival of analog computing could disrupt the entire AI industry.

From Analog to Neuromorphic Computing

Neurons in the human brain communicate with each other via short blips of electricity. Neuroscientists call such a blip a ‘spike.’ Hence, understanding the brain means understanding spikes. Spiking Neural Networks (SNNs) are network models that mimic this behavior of biological neurons. A neuron in an SNN transmits information only when an intrinsic quantity of the neuron, its membrane potential, reaches a specific value defined as the ‘threshold.’ When the threshold is reached, the neuron fires and generates a signal that travels to other neurons, which, in turn, increase or decrease their response to this signal.
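The threshold mechanism can be illustrated with a leaky integrate-and-fire neuron, a common simplified model of a spiking neuron. The sketch below is a minimal illustration under assumed parameters: the function name `simulate_lif` and the values for `threshold`, `leak` and `reset` are chosen for this example only, and the effect of a spike on downstream neurons is not modelled.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return a spike train (one 0 or 1 per time step) for a single neuron."""
    potential = reset  # internal state that is compared against the threshold
    spikes = []
    for current in input_current:
        # The state decays a little each step (leak) and accumulates the input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)      # threshold reached: the neuron fires
            potential = reset     # and its state returns to the resting value
        else:
            spikes.append(0)      # below threshold: nothing is transmitted
    return spikes


print(simulate_lif([0.3] * 10))   # constant input strong enough to cause spikes
print(simulate_lif([0.05] * 10))  # weak input: the leak keeps the state below threshold
```

With the stronger input the neuron stays silent for a few steps, fires, resets and repeats; with the weak input it never fires. Information is carried by when spikes occur rather than by a continuously transmitted value.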

A key aspect of engineering neuromorphic systems is understanding how the morphology of individual neurons processes information in ways that support learning, adapt to local change (plasticity) and facilitate evolutionary change. One of the first applications of neuromorphic engineering was proposed in the late 1980s by Carver Mead, Professor Emeritus of Engineering and Applied Science at the California Institute of Technology.

Neuromorphic engineering translates what we know about the brain’s functionality into neuromorphic systems.

Mead’s main interest was in replicating the analog nature of biological computation, and the role of neurons in cognitive tasks, in order to design useful analog computer applications.

The Revival of Analog Computing with IBM’s Neuromorphic Chip

The design of today’s digital computers is based on the ‘Von Neumann architecture’. The central processing unit (CPU) processes data, while the data itself is stored in a separate memory. For each calculation, the computer must shuttle data back and forth between those two units, which takes time and energy.

IBM’s new brain-inspired analog chip advances the idea of neuromorphic computing to a new frontier: One day, it could power your phone and other devices with a processing efficiency that is equivalent to the human brain. IBM’s new chip maps 35 million neurons, a far cry from the 100 billion neurons the human brain contains. The chip was used for a speech recognition task to test its application potential.

The result showed an impressive sevenfold improvement in performance and a fourteenfold improvement in energy efficiency compared to conventional digital processors.

Although these results are impressive, analog chips are still in their infancy. They show enormous promise for combating the sustainability problems associated with AI, but the path forward requires clearing a few more hurdles.

One of these hurdles is improving the design of the memory technology; another is improving how the chip is physically laid out. On the software side, algorithms tailored specifically to analog chips must be developed, capable of translating code into a form that analog systems can easily process. As these chips become commercially available, developing dedicated applications will foster the vision that analog computing will one day be as successful as digital computing has been in the past.

According to an IBM patent application published in 2018, one of the most appealing attributes of such neural networks is their portability to low-power neuromorphic hardware, which can be deployed in mobile devices and operate at extremely low power for real-time applications. In addition, building a neuromorphic device may advance our knowledge of how our mind works. The goal of such an endeavor is to produce a chip that can ‘learn’ from the inputs it receives and solve problems far more efficiently than today’s digital AI systems.

Such an engineering effort will lead to an entirely new class of systems that can be ‘trained’ to recognize patterns with far fewer inputs compared to a digital neural network and consume considerably less energy while breaking AI’s dependency on data provided by the cloud.

Examples of Neuromorphic Design Projects

Today, several academic and commercial experiments are underway to produce neuromorphic systems. Some of these efforts are summarised as follows:

- SpiNNaker

SpiNNaker is a computer developed by the Institute of Neuroscience at Germany’s Jülich Research Centre in collaboration with the Advanced Processor Technologies Group at the University of Manchester in the UK. The “NN” in SpiNNaker is capitalized to emphasize the system’s role in testing an SNN architecture, which is especially suited for neuromorphic computing involving about 200,000 neurons. The initial goal is to simulate 10% of the cognitive intelligence of a mouse brain and 1% of the intelligence of a human brain.

- Intel Loihi

Intel is experimenting with a neuromorphic chip architecture called Loihi. Until very recently, Intel had been reluctant to share extensive details of Loihi’s architecture, though we now know, through reporting by ZDNet’s Charlie Osborne, that Loihi uses the same 14-nanometer chip design technology that others employ as well. First announced in September 2017, Loihi’s microcode (its instructions at the chip level) includes procedures designed specifically for training spiking neural networks. Unlike SpiNNaker, which is explicitly described as a simulation of neuromorphic processes, Loihi is designed to be ‘brain-inspired.’

- SynSense (formerly aiCTX)

SynSense is a venture-capital-financed spin-off established in 2017, following twenty years of research at the University of Zurich and ETH Zürich, that focuses on commercializing neuromorphic intelligence. Based on its patented analog chip design, it is currently the only neuromorphic technology company that provides both sensing and computing at the chip level. Thanks to its extremely low power consumption, the technology is used for ‘always-on’ surveillance and health care applications.

Outlook

Neuromorphic research goes beyond the question of whether our understanding of reasoning may be symbolized by how a digital computer stores and processes data. Scientists investigate whether analog devices can reproduce the way the brain functions.

It is likely that progress in neuroscience and the design of neuromorphic systems will eventually replicate the entire functionality of the human brain.

With a technology that requires far less power and generates substantially less heat, the performance improvements of analog computing might indeed challenge the present-day dominance of digital computing. Big Tech has too much to lose not to take this potential disruption seriously.