Chatbot, Where Are You Headed?

Several breakthrough technologies have changed society over the past centuries. However, these changes didn’t happen overnight. It took time for each new technology to be adopted after its invention: more than 80 years for the steam engine, more than 40 years for electricity, and still several years for the internet to become mainstream. Recent AI developments, specifically in natural language processing (NLP), are turning into practical applications as we (and the chatbots) speak. This will profoundly change the way we live and work.

The short history of AI started in the 1960s with computers that could break down problems into simpler ones. By the 1970s, AI systems were able to classify diseases, and many people thought technology would soon replace humans. Obviously, this didn’t happen. In fact, little progress was made during a long “AI Winter” that lasted for decades. Then, in the 2010s, things began to move again with Siri and with IBM Watson playing the game show Jeopardy.

All the big players got involved: Amazon, Apple, Facebook, Google, IBM and Microsoft. DeepMind developed AI that could play games. AlphaGo learned to play the board game Go from games played by human experts. AlphaGo Zero could even teach itself, and its successors mastered additional games; one of them, MuZero, did so even without being told the rules.

AI-Powered Chatbots

For years, people have expected that further developments in NLP would lead to the next breakthrough in AI technology. This brings us to the present day and the new generation of chatbots that has received global attention. These chatbots are based on large language models (LLMs): OpenAI’s ChatGPT is based on GPT (including its latest iteration, GPT-4), and Google’s Bard is based on LaMDA.

Some people already hype LLMs as Artificial General Intelligence (AGI) – a machine as intelligent as we are. The truth is less exciting: These systems cannot understand meaning at all. “All” they do is generate sequences of words, one at a time. During training on massive amounts of text from the internet, the model learns to predict the next word in a sequence. The trained model then generates text by repeatedly sampling one word at a time, based on the input and the words it has already produced.
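
To make the “one word at a time” idea concrete, here is a deliberately tiny sketch in Python. It is our own illustration, not how GPT or LaMDA work internally: real LLMs use large neural networks that take the entire preceding text into account, whereas this toy bigram model, trained on a made-up one-line corpus, only looks at the last word. It does, however, show the same basic loop: look at which words could come next, sample one, append it, and repeat.

```python
import random
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.split()
    followers = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        followers[current][following] += 1
    return followers


def generate(model, prompt, max_words=10, seed=None):
    """Extend the prompt by sampling one word at a time."""
    rng = random.Random(seed)
    output = prompt.split()
    for _ in range(max_words):
        candidates = model.get(output[-1])  # .get() does not add missing keys
        if not candidates:
            break  # the last word was never followed by anything in training
        words = list(candidates)
        weights = [candidates[word] for word in words]
        # Pick the next word in proportion to how often it followed the last one.
        output.append(rng.choices(words, weights=weights, k=1)[0])
    return " ".join(output)


corpus = "the cat sat on the mat . the dog sat on the rug ."
model = train_bigram_model(corpus)
print(generate(model, "the cat", max_words=8, seed=1))
```

Running it with different seeds produces different continuations of the same prompt, which hints at why a chatbot can give different answers to the same question. Nothing in the loop checks whether a sentence is true; it only reflects which words tended to follow each other in the training text.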

Amazingly – and that’s what ignited the global hype – the output often looks as if it had been produced by human professionals. Conversing with an LLM through a chatbot can feel like talking to a human being. This makes it tempting to believe the machine has real intelligence. Whether people will ever succeed in creating an AGI is a question for another day. However, the capabilities of LLMs have the potential to change business models, transform entire industries, and reshape society.

LLMs can do many things. They can write emails, letters, and even poetry. They can generate code and translate languages, and related generative models can even create images. They will undoubtedly replace humans in some tasks and disrupt journalism, customer service, healthcare, marketing, language translation, education, and many other fields. OpenAI CEO Sam Altman says:

We’re just in a new world now. Generated text is something we all need to adapt to.

It’s not the first time people have feared machines replacing them. It wasn’t much different during the Industrial Revolution. In the end, however, the Industrial Revolution created more jobs than it took away. So, yes, many people will do different jobs. And where humans are needed, they will be needed more than ever.

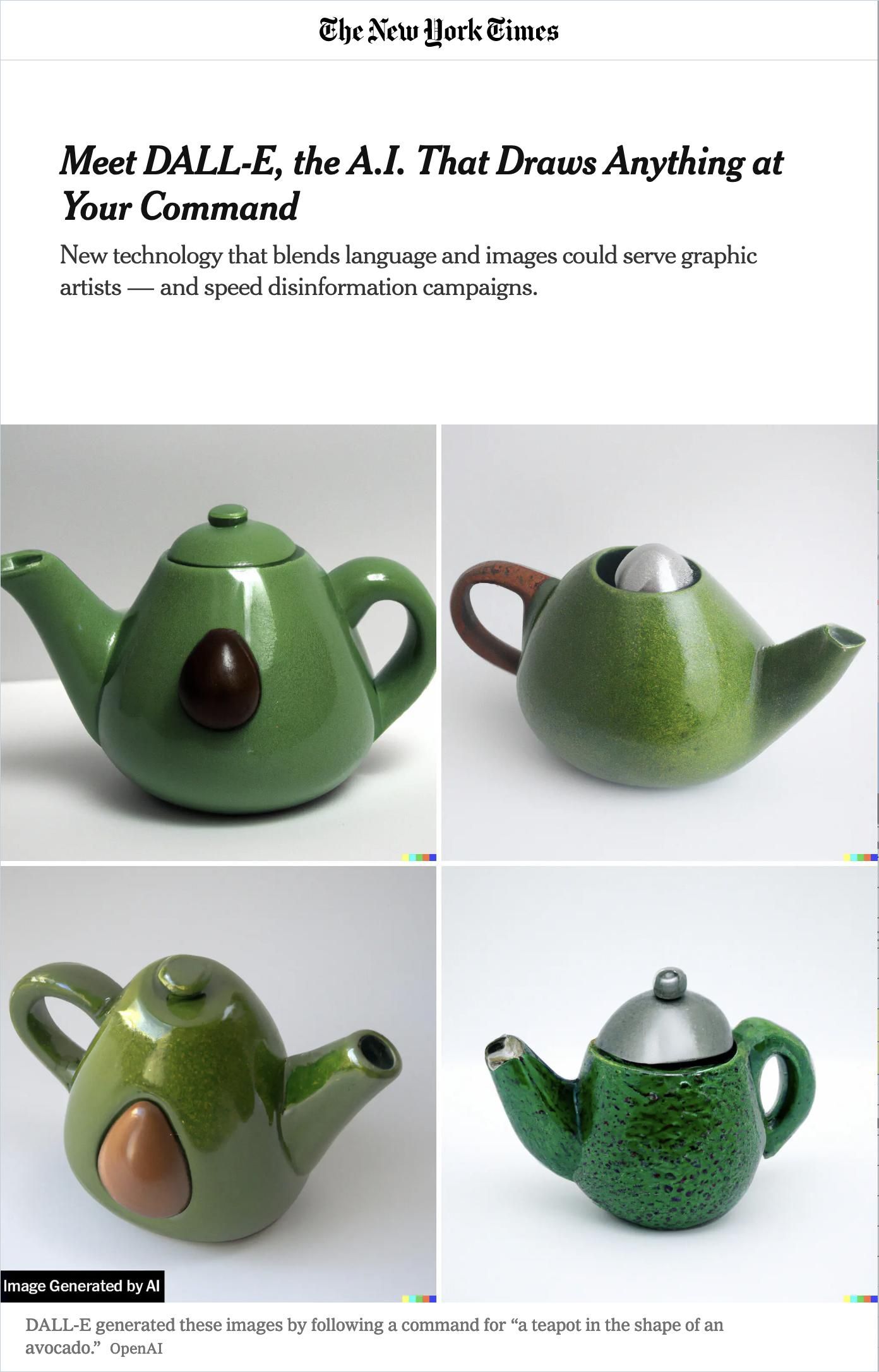

Meet DALL-E, the A.I. That Draws Anything at Your Command

The New York Times

One revolution driven by LLMs is already in full swing: The transformation of search engines. Chatbots are revolutionizing online search. Their answers read much more naturally than traditional search results. You don’t need to click on an entry and search a website for relevant content. Instead, a chatbot returns a short text that may answer your question and invites you to ask follow-up questions that explore the subject further.

Search engines like Bing combine a chatbot with traditional search results displayed below it. This keeps the precious ad space alive and the traditionalists on board while innovating with the latest AI technology.

Knowing and Guessing

LLMs can only use the data they were trained on. That data has a cut-off date, so a model cannot answer queries about more recent content. However, combining the chatbot with a search engine has opened up new possibilities. You still start by entering your query into the search field. The search engine then finds and lists websites matching your request. The chatbot takes these search results as input, distills the website content into a short text, and provides links to the sources it drew from. This way, it not only overcomes its cut-off date but also lets you cross-check its answer against the source material. This is important because there is one thing you should know about LLMs: They have no source of truth.
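
A common way to build this kind of search-plus-chatbot setup is known as retrieval-augmented generation. The Python sketch below shows only the plumbing around the model, under stated assumptions: the `SearchResult` type, the example URLs, the prompt wording, and the model’s answer are all hypothetical, and the actual call to a language model is left out because it depends on the provider’s API. It is not how Bing or any other product is implemented. The idea is that the model receives numbered sources and is asked to cite them, so each citation can be traced back to a link you can open.

```python
import re
from dataclasses import dataclass


@dataclass
class SearchResult:
    """One hit from a search engine (hypothetical structure)."""
    title: str
    url: str
    snippet: str


def build_grounded_prompt(query, results, max_results=3):
    """Build a prompt that asks the model to answer only from numbered
    sources. Returns the prompt and the list of sources it contains."""
    sources = results[:max_results]
    lines = [
        "Answer the question using only the sources below.",
        "Cite sources by number, e.g. [1]. If the sources do not",
        "contain the answer, say so.",
        "",
    ]
    for number, result in enumerate(sources, start=1):
        lines.append(f"[{number}] {result.title} ({result.url})")
        lines.append(result.snippet)
        lines.append("")
    lines.append(f"Question: {query}")
    return "\n".join(lines), sources


def cited_urls(answer, sources):
    """Map [n] markers in the model's answer back to source URLs,
    ignoring numbers that do not correspond to any source."""
    numbers = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    return [sources[n - 1].url for n in sorted(numbers) if 1 <= n <= len(sources)]


# Hypothetical search results and a hypothetical model answer.
results = [
    SearchResult("Steam engine", "https://example.com/steam", "Adoption took decades."),
    SearchResult("Electricity", "https://example.com/power", "Adoption took about 40 years."),
]
prompt, sources = build_grounded_prompt("How long did electricity take to catch on?", results)
print(prompt)
answer = "About 40 years [2]."  # in practice, this would come from the language model
print(cited_urls(answer, sources))  # → ['https://example.com/power']
```

Instructions like these make citations more likely, but they do not guarantee that the model’s summary is faithful to the sources. That is why opening the linked pages and checking them still matters.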

A.I. Is Mastering Language. Should We Trust What It Says?

New York Times Magazine

LLMs cannot “know” what is right and what is wrong. In an article published in Scientific American, Gary Marcus explains:

[They] are inherently unreliable…frequently making errors of both reasoning and fact. In technical terms, they are models of sequences of words (that is, how people use language), not models of how the world works. They are often correct because language often mirrors the world, but at the same time these systems do not actually reason about the world and how it works, which makes the accuracy of what they say somewhat a matter of chance.

The problem starts when what the model says sounds plausible to a layperson but is, in fact, inaccurate or nonsensical. If you don’t know what you don’t know, you may take an answer at face value. An editorial in the journal Nature says:

A user’s prompt may generate text from ChatGPT that includes content that the user does not understand, but which the user may be tempted to incorporate into their writing. Used judiciously, this may be a productive way to learn about a topic. A downside is that ChatGPT may normalize a new form of writing in which the human user merely curates large swaths of text by rearranging the output from multiple prompts.

Is this what our future will look like? Will social media and other online platforms be flooded by content that superficially looks plausible but contains misinformation? And what if someone uses this technology for deliberate manipulation?

Alarmed Experts

Recent AI developments alarm even experts. An open letter signed by Elon Musk, Steve Wozniak, Stuart Russell, Max Tegmark, Yuval Noah Harari, and others says:

We call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.

It’s not the first time we have heard experts voice concerns about AI technology, but this time there is an unprecedented urgency to it. The signatories don’t want to halt AI development altogether, but they believe we are rushing blindly into creating models nobody fully understands and that we should consider the potential ramifications.

The letter, which you can find on the Future of Life Institute website, states:

AI research and development should be refocused on making today’s powerful, state-of-the-art systems more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal.

Training LLMs also raises profound ethical questions. What you get out of a model cannot be better than what you feed into it. Can we possibly train a model without introducing biases? How can it support diversity, equity and inclusion? Would it even be possible for people from all cultures around the globe to agree on an ethical standard?

These are exciting times. As we navigate the novel opportunities and challenges of new AI technologies, humans will undoubtedly be needed more than ever.

Applicable advice

- LLMs have no source of truth and can produce plausible-sounding but inaccurate information. Always verify information provided by LLMs with subject-matter experts or other reliable sources before accepting it as true.

- Avoid drawing on a model’s “internal knowledge”; instead, provide text and instruct the model to transform it. This allows you to cross-check the output against the input. Transformations include rewriting text in a different style, translating, summarizing, and creating bulleted lists or table views.

- Use new AI technology responsibly.

- Be aware of the limitations of LLMs, and don’t overestimate their capabilities.

- Be critical of the information you encounter online and develop strong media literacy skills to recognize and counter misinformation. This may include lateral reading using trusted sources.
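
The advice to give the model text to transform, rather than relying on its internal knowledge, pays off because the output can be compared with the input. As one illustration, here is a minimal Python sketch of a crude, automated first pass. It is our own heuristic, not an established tool: it lists numbers and capitalized words in the model’s output that never appear in the source text. A flagged item deserves a manual look; an empty result does not prove the output is accurate.

```python
import re

# Numbers (including forms like 1,000 or 3.5) or runs of letters.
TOKEN = re.compile(r"\d+(?:[.,]\d+)*|[A-Za-z]+")


def unsupported_details(source: str, output: str) -> list[str]:
    """Return numbers and capitalized words from `output` that do not
    occur anywhere in `source` (case-insensitive), in order of appearance."""
    source_tokens = {token.lower() for token in TOKEN.findall(source)}
    flagged = []
    for token in TOKEN.findall(output):
        is_detail = token[0].isdigit() or token[0].isupper()
        if is_detail and token.lower() not in source_tokens and token not in flagged:
            flagged.append(token)
    return flagged


source = ("The steam engine took more than 80 years to be adopted, "
          "electricity more than 40 years.")
summary = "The steam engine took 80 years to be adopted, electricity 50 years."
print(unsupported_details(source, summary))  # → ['50']
```

Here the summary turned 40 into 50, and the check catches it. It would miss an error built only from words already present in the source, such as swapping the two figures, so it complements rather than replaces careful reading.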

Find out more about AI and its implications for the workplace in the following Journal contributions: